Supercharging Search and Retrieval for Unstructured Data

Best-in-class embedding models and rerankers

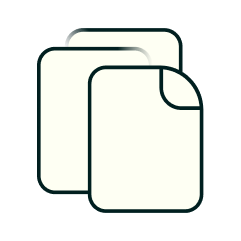

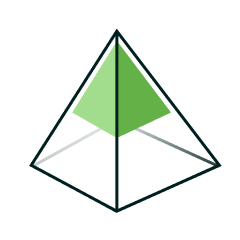

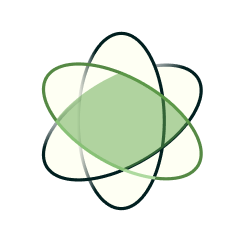

Embeddings and Rerankers Drive RAG Retrieval and Response Quality

Unstructured data → Embedding model → Vector DB → Reranker → Relevant files → LLM → Factual responses with lower costs
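The retrieval flow above can be sketched in a few lines of Python. This is only an illustrative toy under stated assumptions, not any vendor's API: `toy_embed`, `ToyVectorDB`, `toy_rerank`, `retrieve`, and `build_prompt` are hypothetical stand-ins (a hashed bag-of-words "embedding", an in-memory list as the vector DB, and a token-overlap "reranker"), chosen so the example runs with the standard library alone.

```python
import math
import re
import zlib
from collections import Counter

DIM = 64  # toy embedding size (assumption); real models use far more dimensions


def tokenize(text):
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def toy_embed(text, dim=DIM):
    """Hypothetical stand-in for an embedding model: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * dim
    for tok in tokenize(text):
        vec[zlib.crc32(tok.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class ToyVectorDB:
    """Hypothetical in-memory vector store with brute-force cosine search."""

    def __init__(self):
        self.rows = []  # list of (vector, document) pairs

    def add(self, doc):
        self.rows.append((toy_embed(doc), doc))

    def search(self, query, k):
        q = toy_embed(query)
        # Vectors are unit length, so the dot product equals cosine similarity.
        ranked = sorted(
            self.rows,
            key=lambda row: sum(a * b for a, b in zip(q, row[0])),
            reverse=True,
        )
        return [doc for _, doc in ranked[:k]]


def toy_rerank(query, docs, top_n):
    """Hypothetical stand-in for a reranker: scores each (query, doc) pair by token overlap."""
    q = Counter(tokenize(query))

    def score(doc):
        tokens = tokenize(doc)
        d = Counter(tokens)
        overlap = sum(min(q[t], d[t]) for t in q)
        return overlap / (len(tokens) or 1)

    return sorted(docs, key=score, reverse=True)[:top_n]


def retrieve(db, query, k=3, top_n=1):
    """Stage 1: cheap vector search for k candidates. Stage 2: rerank and keep top_n."""
    candidates = db.search(query, k)
    return toy_rerank(query, candidates, top_n)


def build_prompt(query, context_docs):
    """Assemble the text that would be sent to an LLM (the LLM call itself is omitted)."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    db = ToyVectorDB()
    for doc in [
        "Invoices are due within 30 days.",
        "The cafeteria opens at 8 am.",
        "Refunds are processed in 5 business days.",
    ]:
        db.add(doc)
    question = "When are invoices due?"
    print(build_prompt(question, retrieve(db, question)))
```

The two-stage shape is the point of the sketch: the vector search narrows a large corpus to a handful of candidates cheaply, and the reranker spends more effort on that short list so that only the most relevant files reach the LLM prompt.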

A Spectrum of Models for Your Target Use Cases

General-purpose models

Ready for any purpose and language out of the box.

Domain-specific models

Highly optimized for industry-specific data, like finance, legal, and code.

Company-specific models

Fine-tuned librarians for your company’s unique data and lingo.

Powered by Cutting-Edge AI Research and Engineering

High accuracy

Retrieving the most relevant contextual information

Low dimensionality

3x-8x shorter vectors ⇒ cheaper vector search and storage

Low latency

4x smaller model and faster inference with superior accuracy

Cost efficient

2x cheaper inference with superior accuracy

Long-context

Longest commercial context length available (32K tokens)

Modularity

Plug-and-play with any vector DB and LLM
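To make the low-dimensionality point concrete, the arithmetic below estimates raw index size for different vector lengths. All numbers are illustrative assumptions rather than figures from this document: a corpus of 1,000,000 vectors, 4-byte float32 values, and a hypothetical 3072-dimension baseline compared against vectors 3x and 8x shorter. The helper `index_size_bytes` is likewise hypothetical and ignores index overhead and compression.

```python
def index_size_bytes(num_vectors, dim, bytes_per_value=4):
    """Raw storage for dense vectors: one value per dimension per vector."""
    return num_vectors * dim * bytes_per_value


NUM_VECTORS = 1_000_000  # assumed corpus size
BASELINE_DIM = 3072      # assumed baseline dimensionality

for factor in (1, 3, 8):
    dim = BASELINE_DIM // factor
    size_gb = index_size_bytes(NUM_VECTORS, dim) / 1e9
    print(f"{factor}x shorter: dim={dim}, raw index size = {size_gb:.3f} GB")
```

Storage scales linearly with dimensionality, and so does the cost of a brute-force similarity scan, which is why shorter vectors reduce both storage and search cost.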